Right now, there’s nothing cooler than saying you’re building a company powered by AI or on top of GPT-4. But cool on its own isn’t enough. Jack Dorsey didn’t raise money for Twitter by saying they were building a social media site on Heroku.

When Spanning Labs (Drew’s company) was building web3 infrastructure, we found ourselves constantly needing to explain that we were not technology in search of a problem, but rather, the other way around. Too many people had spent too many years building and investing in web3 technologies instead of businesses. We see a similar pattern emerging in generative AI today.

Generative AI is a powerful toolset. It does change the game. But it is not the end game.

Here’s a double-edged sword: It’s never been easier to build in AI. On the (huge) upside, this means we can now take business ideas that weren’t possible before (or were only linearly scalable before) and turn them into great, fund-returning investments. Existing companies are also leveraging AI to build new efficiencies that let them go further, faster. This is all extremely exciting. On the downside: There’s also a lot of new garbage to sift through.

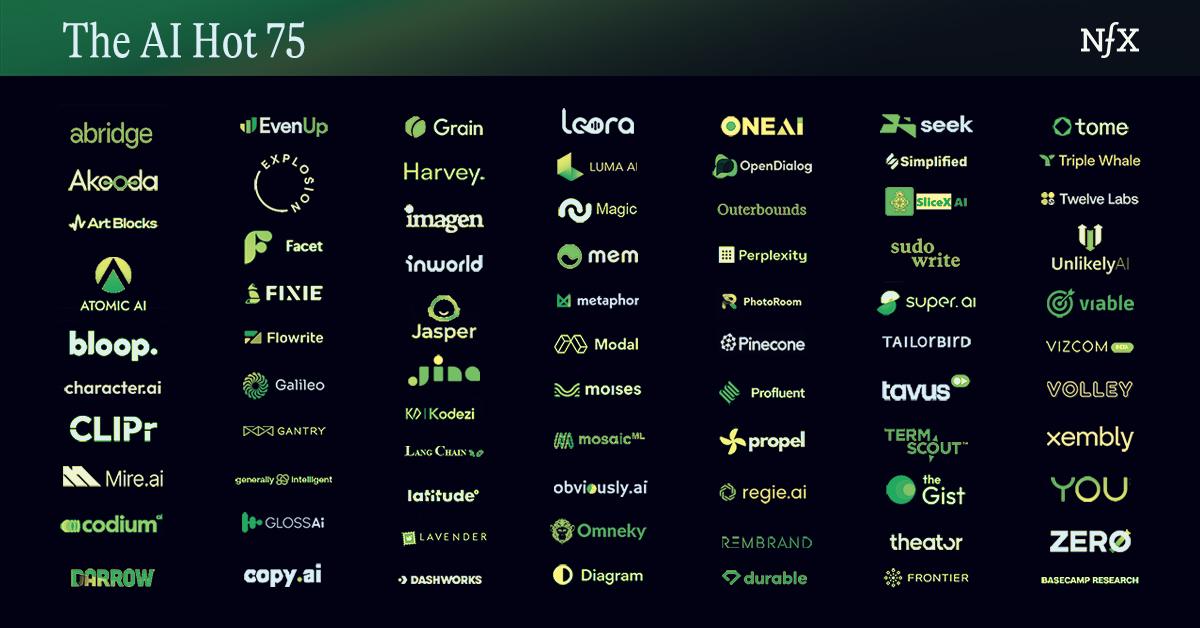

We were recently at a demo day where three companies sounded promising, until we looked at the open source libraries they had simply wrapped in a new interface. Right now it can be tricky to tell what is hard, unique, and defensible from a weekend project built on top of an open source project. Founders and investors, especially during a moment of exponential growth and consensus, need to run through a few litmus test questions when they are evaluating the next generative AI company.

Fortunately, if you know where to look, there are ways a small team can build something hard, unique, and defensible in AI today. So we (Morgan and Drew; yes, we’re siblings) put our heads together over a recent family dinner to compile notes – and red flags – for founders and investors who are respectively pitching and evaluating new startups building in generative AI.

It’s just AI inside

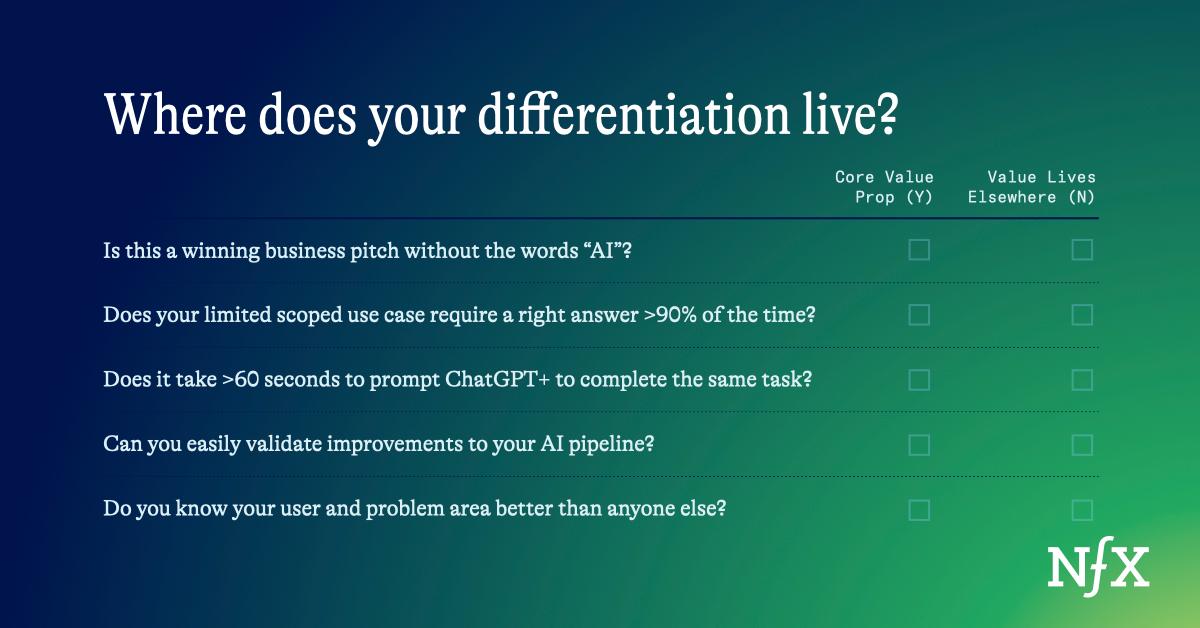

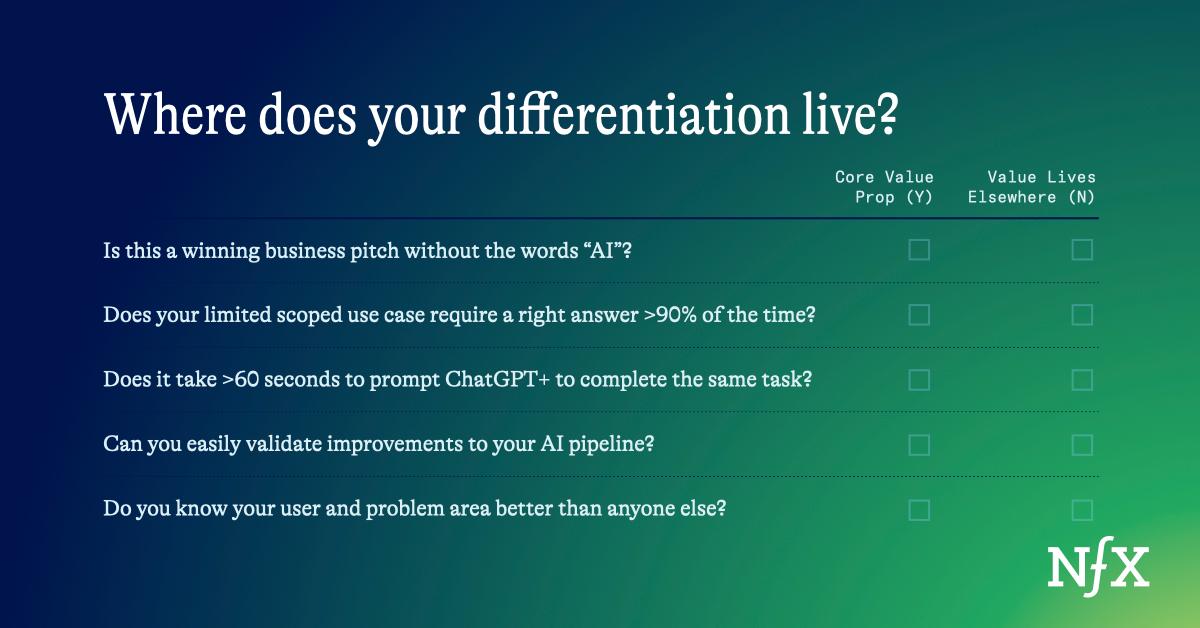

The first note is simple and the most important. If you took out references to AI in your pitch deck, is this still a good business?

AI may be vital to how you are solving a problem or scaling your solution, but for this test, treat it as a black box. If you are building a product that users love and you are defensible in something other than your AI, congratulations. (And come talk to us.)

Focus on how the cool AI under the hood relates back to the core business value proposition. “ChatGPT for X” is not the business. X is the business. From there, you can evaluate X with the same proven frameworks used to evaluate any other business. Is X a large enough market? Are people willing to pay for X? Is X defensible in some way?

If your product’s quality depends heavily on generative AI, then anyone else with access to foundational models (i.e., everyone) can shine just as brightly. On the other hand, if your user experience is unparalleled because you understand your end user better than anyone else, you may be on to something.

Good businesses are good businesses.

Where are you on the correctness spectrum?

AI models get things wrong. They are probabilistic solutions to problems. Most LLMs, for example, are predicting the most likely next word (strictly, the next token). This means they hallucinate facts, draw wrong conclusions, and lie. As models get smarter and context windows get larger, the issue is improving, but it will always be an issue.

For you, this means a few things. First, consider how often your product can fail at a task and still be valuable. A chatbot that gives medical advice directly to a patient requires essentially perfect correctness. A chatbot that gives medical advice to a doctor, who uses it as one tool among many, has a lower bar to clear. Make sure you know where your bar is, and that you are serving the right product to the right user with this in mind.

Focusing on the right industry and user persona can even turn this problem into an advantage. In some creative fields, where there is rarely a single “wrong” answer, hallucinations can become valuable creative output.

The self-driving car industry has spent the most time working on this correctness problem and on frameworks to address it for complex models and systems. A central idea from that work is the operational design domain (ODD): the specific set of conditions under which a system is designed to operate. Bounding the ODD of your AI makes it much easier to test and validate the correctness of your pipeline. For self-driving cars, this means restricting vehicles to very specific road conditions, maps, and scenarios, and simulating millions of driving miles.

The simplest example of this method would be an LLM with a verified ODD of nothing: whatever question or task you give it, the model simply responds that it doesn’t know how to answer or proceed. “I don’t know” isn’t particularly helpful, but at least it is never wrong!

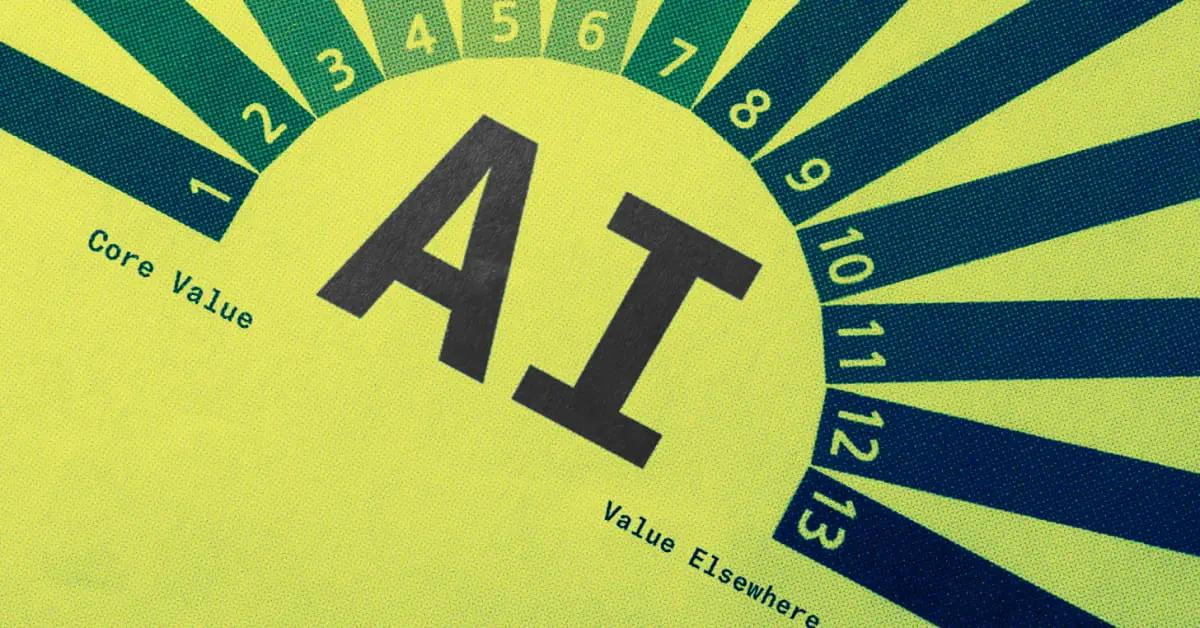

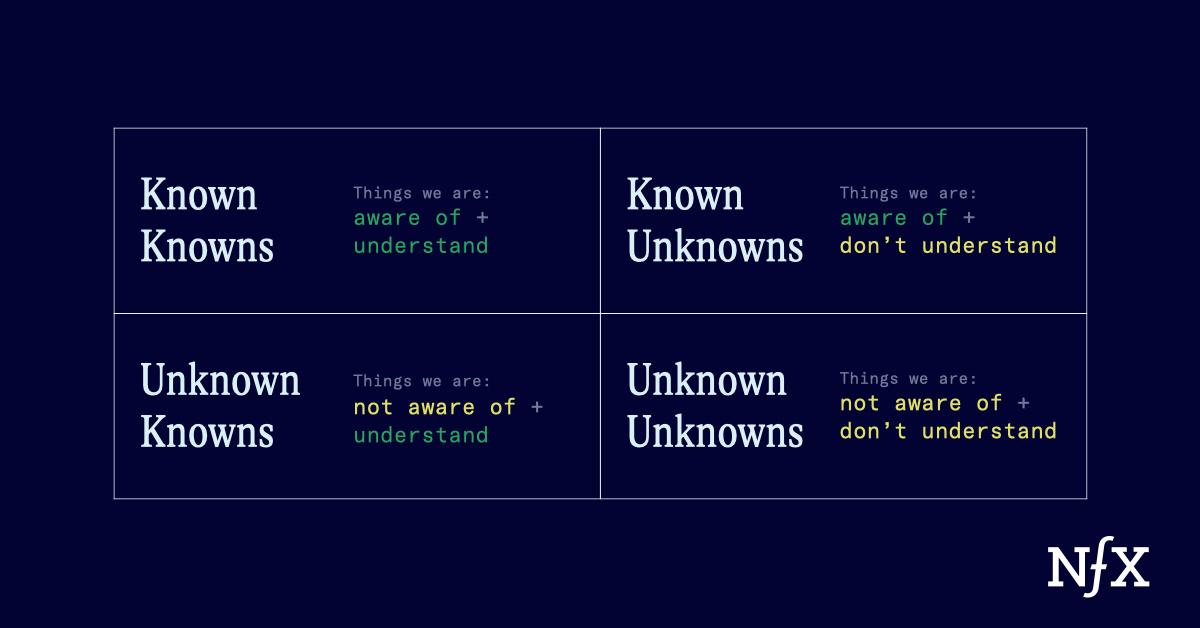

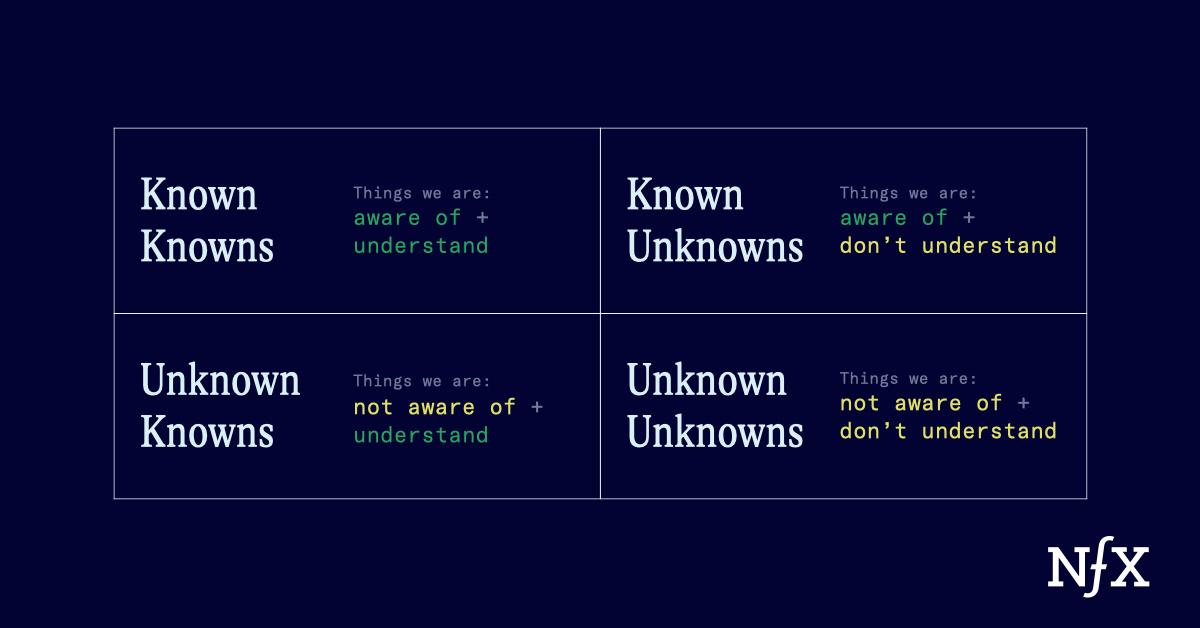

As Donald Rumsfeld said: “There are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns—the ones we don’t know we don’t know… it is the latter category that tends to be the difficult ones.”

If you can define all of your “knowns” and build guardrails to prevent any “unknown unknowns,” then you can reach a high correctness bar for specific use cases.

It’s not easy, and it is very case-specific, but it is possible to define an ODD and restrict your product to it. That makes businesses that require high correctness for well-scoped uses fairly unique.
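The ODD idea can be illustrated as a thin wrapper around a model call. What follows is a minimal, hypothetical Python sketch, not a production guardrail: `BoundedAssistant`, `classify`, `generate`, and `validate` are illustrative names, and the toy versions are a keyword match, a canned string, and a substring check standing in for a real intent classifier, foundation-model call, and post-processing step. With an empty set of supported intents, it behaves like the “ODD of nothing” assistant.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Optional

REFUSAL = "I don't know how to help with that."


@dataclass(frozen=True)
class BoundedAssistant:
    """Hypothetical wrapper that only answers inside a declared ODD."""

    # The "knowns": intents the team has defined, tested, and validated.
    supported_intents: FrozenSet[str]
    # Placeholder for an intent classifier; returns None when unsure.
    classify: Callable[[str], Optional[str]]
    # Placeholder for a foundation-model call, given the intent and question.
    generate: Callable[[str, str], str]
    # Placeholder post-processing check that a draft stays inside the ODD.
    validate: Callable[[str, str], bool]

    def answer(self, question: str) -> str:
        intent = self.classify(question)
        # Pre-check: refuse anything that is not a known, supported intent.
        if intent is None or intent not in self.supported_intents:
            return REFUSAL
        draft = self.generate(intent, question)
        # Post-check: refuse rather than return an answer that fails validation.
        if not self.validate(intent, draft):
            return REFUSAL
        return draft


# Toy stand-ins (assumptions): a keyword "classifier", a canned "model", a fact check.
def toy_classify(question: str) -> Optional[str]:
    return "store_hours" if "hours" in question.lower() else None


def toy_generate(intent: str, question: str) -> str:
    return "We are open 9am to 5pm, Monday to Friday."


def toy_validate(intent: str, draft: str) -> bool:
    return "9am to 5pm" in draft


# An ODD of nothing: never helpful, but never wrong.
empty_odd = BoundedAssistant(frozenset(), toy_classify, toy_generate, toy_validate)
# A one-intent ODD: answers store-hours questions and refuses everything else.
hours_only = BoundedAssistant(
    frozenset({"store_hours"}), toy_classify, toy_generate, toy_validate
)
```

Both failure paths return the same refusal: outside its declared domain, or when an answer fails the check, the product falls back to “I don’t know” instead of guessing. That is the trade a bounded ODD asks you to make in exchange for high correctness inside it.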

Differentiate your AI pipeline

We are moving further and further into a world where foundational models are king. Without billions of dollars, you shouldn’t expect to build and stay on the cutting edge of AI research.

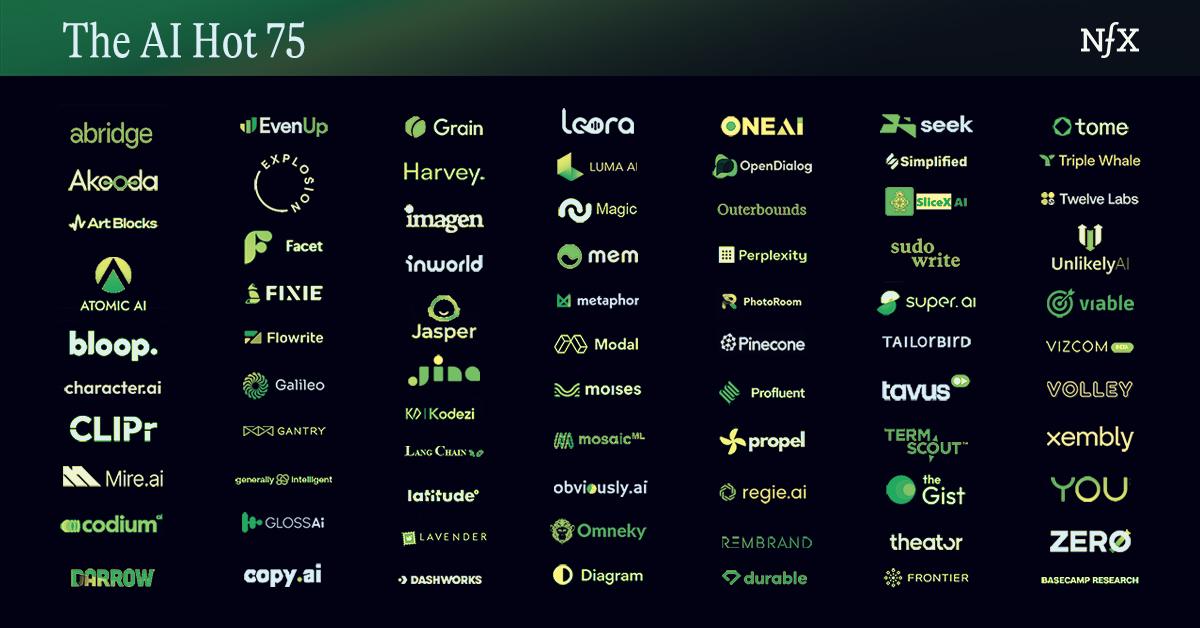

This means you should focus your time on what can be built around these foundational models and on how you can optimize your use of them. NFX’s 5-Layer Generative Tech Stack is a great place to start.

Remember that foundational models are just that: a foundation. There are many areas around foundational model inference where you can build solid differentiation into your AI pipeline.

Some examples:

– Picking the right foundational model for each task; for example, using GPT-NeoX to summarize information before feeding it into GPT-3’s context window can optimize both performance and infrastructure costs.

– Prompt engineering and pre-processing data with custom embeddings to significantly boost performance on a specific generation task.

– Post-processing results to enforce the bounds of your ODD.

– Fine-tuning a foundational model to a specific data set to get unique performance.

– Robust infrastructure for end-to-end validation pipelines that let you quickly test, iterate on, and ship improvements to your company’s pipeline.
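To make the validation-pipeline idea concrete, here is a minimal, hypothetical Python sketch of an automated check that compares a baseline pipeline against a candidate change on a fixed, labeled set of prompts. The names (`evaluate`, `accept_change`, `correctness_bar`), the normalized exact-match metric, and the rule that a change must not lose coverage are all illustrative assumptions. A real product would need task-appropriate metrics, and exact match is itself a biased metric because it penalizes correct paraphrases.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple

# A pipeline maps a prompt to an answer, or None when it declines to answer.
Pipeline = Callable[[str], Optional[str]]


@dataclass(frozen=True)
class EvalReport:
    answered: int
    correct: int
    total: int

    @property
    def coverage(self) -> float:
        # Share of eval prompts the pipeline attempted at all.
        return self.answered / self.total if self.total else 0.0

    @property
    def precision(self) -> float:
        # Correctness over attempted prompts; declining everything is never wrong.
        return self.correct / self.answered if self.answered else 1.0


def evaluate(pipeline: Pipeline, cases: Sequence[Tuple[str, str]]) -> EvalReport:
    answered = correct = 0
    for prompt, expected in cases:
        output = pipeline(prompt)
        if output is None:
            continue
        answered += 1
        # Normalized exact match: easy to automate, but biased against paraphrases.
        if output.strip().lower() == expected.strip().lower():
            correct += 1
    return EvalReport(answered, correct, len(cases))


def accept_change(
    baseline: EvalReport, candidate: EvalReport, correctness_bar: float
) -> bool:
    # Ship only if the candidate clears the bar and regresses on neither metric.
    return (
        candidate.precision >= correctness_bar
        and candidate.precision >= baseline.precision
        and candidate.coverage >= baseline.coverage
    )


# Toy usage: dict lookups stand in for real pipelines (dict.get returns None on a miss).
cases = [
    ("What is the capital of France?", "Paris"),
    ("What is 2 + 2?", "4"),
    ("Who wrote Hamlet?", "Shakespeare"),
]
baseline = {"What is the capital of France?": "Paris"}.get
candidate = {
    "What is the capital of France?": "Paris",
    "What is 2 + 2?": "4",
    "Who wrote Hamlet?": "Marlowe",
}.get

baseline_report = evaluate(baseline, cases)
candidate_report = evaluate(candidate, cases)
```

Here the candidate triples coverage but gets one answer wrong, so with a `correctness_bar` of 0.9 it would be rejected. Tracking coverage and precision separately keeps that trade-off visible instead of hiding it inside a single score, which also makes it clearer why a metric moved.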

Evaluating Teams at AI Startups

First: Don’t hire by hype.

Founders, consider that it might be more important for you to hire the infrastructure engineer with 10 years of experience at Cisco, instead of the hot new ML PhD from Stanford. A lot of what we have talked about so far in terms of building differentiation doesn’t actually require a bunch of research-focused ML engineers.

Don’t get us wrong, you need your in-house experts who can read the latest papers and incorporate the technology into your product. And we won’t lie; right now, that ML PhD hire will probably make it a little easier to raise an early stage round. But how quickly and how well you can incorporate those changes usually just boils down to good infrastructure. Key infrastructure hires will make it easier to build and maintain a cutting-edge product and a sustainable business.

It is a difficult balance between being flashy and being sustainable. Being able to do new research and make contributions to advancing AI capabilities is great, but it is difficult to turn it into a repeatable process without a very large research team.

The important lesson here for both founders and investors is that you should think of good infrastructure engineers as some of the best production ML engineers. In fact, ML engineers who have worked on production systems likely spend a significant amount of their time on infrastructure.

Another lesson is that in the generative AI era, your startup doesn’t need as many people as you think. And you might be surprised by the types of people you do need.

A few good litmus tests

Here are some concrete questions that you should be asking of yourself, and that investors certainly will be asking you.

Q: Will foundational models eat your lunch?

- What are you spending time on that will only get better when you drop in new foundational models?

- What are you building in your AI pipeline around calls to foundational models that you can patent?

Q: If someone else had the same idea and had access to the same models (they do), what are you going to build that is unique, hard, and that they cannot do?

- Does your differentiation live in pre-processing, post-processing, testing pipelines, or elsewhere in your stack?

- Or does it live outside of your tech stack in your user experience, business model, or problem focus?

Q: How often do you need to give your user the correct answer for the product to be viable?

- How are you defining your ODD and building guardrails around your known unknowns and unknown unknowns?

- How are you measuring correctness?

Q: How long would it take a user to do the same task as your core product with ChatGPT Plus?

- Is it only a matter of knowing the right prompt to add in?

- Does it require a substantial amount of setup like copying and pasting in other documents?

- Is it possible at all?

Q: How do you validate that a change in your AI pipeline improves your product, and how long does that validation take?

- What metric are you using to measure improvements and what inherent biases does it have?

- Is your process manual or automated?

- If automated, do your validation metrics have semantic meaning, so that when they change, it is clear why?

Q: Why are you the right team to do this?

- How well do you know your customer persona?

- Do you have experience standing up and optimizing production ML infrastructure?

- Do you understand how to evaluate and test the strengths and weaknesses of AI models?

As founders ourselves, we respect your time. That’s why we built BriefLink, a new software tool that minimizes the upfront time of getting the VC meeting. Simply tell us about your company in 9 easy questions, and you’ll hear from us if it’s a fit.